QRadar (Security Information and Event Management)

IBM® Security QRadar® SIEM is a network security management platform that provides situational awareness and compliance support. QRadar SIEM uses a combination of flow-based network knowledge, security event correlation, and asset-based vulnerability assessment.

QRadar SIEM provides extensive visibility and actionable insight to help protect networks and IT assets from a wide range of advanced threats. It helps detect and remediate breaches faster, address compliance, and improve the efficiency of security operations.

To get started, configure a basic QRadar SIEM installation, collect event and flow data, and generate reports.

Basic Architecture of QRadar SIEM:

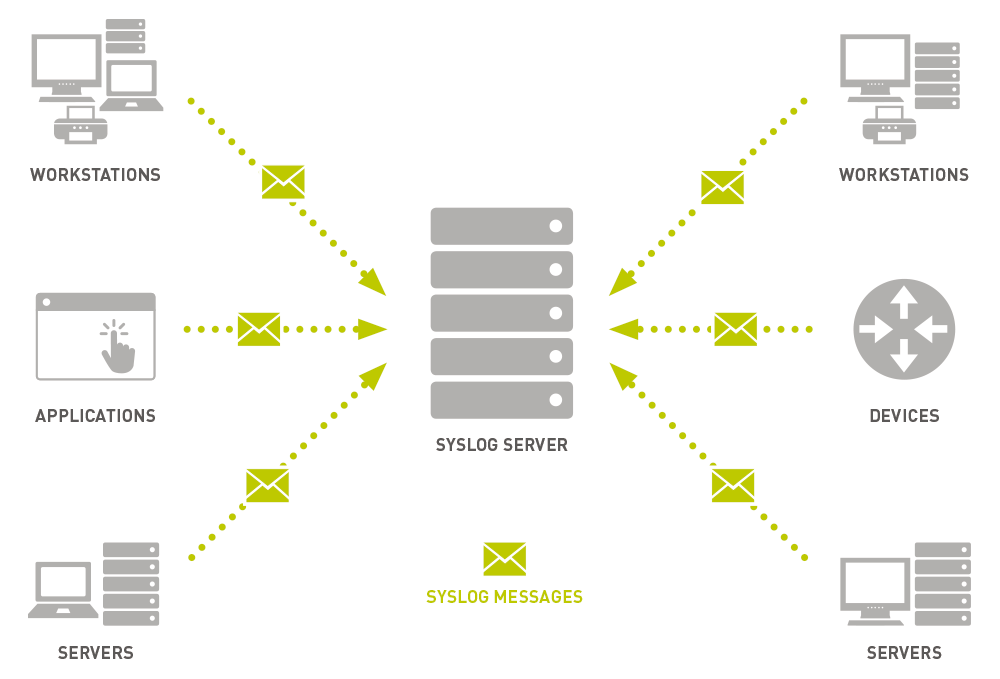

1) Log sources >>

Third-party log sources send data to QRadar for collection, storage, parsing, and processing, and QRadar can be configured to accept these logs. A log source is any data source from which a log event is created. If a log source is not automatically discovered, you can add it manually to receive events from your network devices, applications, and any other source.

Some devices are very specific or custom-built by the customer (devices that are not easy to integrate, like Juniper, FortiGate, etc.). To get logs from these kinds of devices we need the Universal DSM, and sometimes we need to write regular expressions (regex) to parse the logs.
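To illustrate what "writing regex to parse the logs" means, here is a minimal Python sketch. The log format, the device, and the property names are hypothetical; in QRadar itself the expressions are entered in the log source extension or DSM Editor rather than in Python, but simple patterns like these read much the same in either regex dialect.

```python
import re

# Hypothetical log line from a custom, in-house device (the format is an assumption).
SAMPLE = ("2024-05-01T10:15:30Z fw01 action=DENY "
          "src=10.0.0.5:51514 dst=192.168.1.20:443 user=alice")

# One regex per property; each captures the value in a group named "value".
PATTERNS = {
    "action": re.compile(r"action=(?P<value>\S+)"),
    "source_ip": re.compile(r"src=(?P<value>[\d.]+):\d+"),
    "source_port": re.compile(r"src=[\d.]+:(?P<value>\d+)"),
    "destination_ip": re.compile(r"dst=(?P<value>[\d.]+):\d+"),
    "destination_port": re.compile(r"dst=[\d.]+:(?P<value>\d+)"),
    "username": re.compile(r"user=(?P<value>\S+)"),
}


def parse_custom_event(payload: str) -> dict:
    """Apply each regex to the raw payload and keep only the properties that matched."""
    result = {}
    for name, pattern in PATTERNS.items():
        match = pattern.search(payload)
        if match:
            result[name] = match.group("value")
    return result


if __name__ == "__main__":
    print(parse_custom_event(SAMPLE))
```

Keeping one pattern per property means a change in one field of the device's format only breaks that property instead of the whole event.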

Log sources are configured to receive events over different protocols, such as Syslog, SNMP, JDBC (Java Database Connectivity), and OPSEC (Open Platform for Security).
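Syslog is the simplest of these protocols to see in action. The sketch below builds a BSD-style (RFC 3164) syslog line and sends it over UDP port 514, which is roughly what a device does when it forwards events to an event collector. The collector hostname, device name, and message content are hypothetical placeholders.

```python
import socket
from datetime import datetime


def build_syslog_message(facility: int, severity: int, hostname: str, tag: str,
                         message: str, timestamp: datetime) -> bytes:
    """Build an RFC 3164 (BSD syslog) line: <PRI>TIMESTAMP HOSTNAME TAG: MSG."""
    pri = facility * 8 + severity  # PRI packs facility and severity into one number
    # RFC 3164 timestamps use a space-padded day of month, e.g. "May  1 10:15:30".
    ts = f"{timestamp:%b} {timestamp.day:2d} {timestamp:%H:%M:%S}"
    return f"<{pri}>{ts} {hostname} {tag}: {message}".encode("utf-8")


def send_udp_syslog(data: bytes, collector_host: str, port: int = 514) -> None:
    """Send one syslog datagram to an event collector over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(data, (collector_host, port))


if __name__ == "__main__":
    # Facility 4 (auth) and severity 6 (informational) give PRI 38.
    msg = build_syslog_message(4, 6, "fw01", "firewall", "action=DENY src=10.0.0.5",
                               datetime(2024, 5, 1, 10, 15, 30))
    print(msg)
    # send_udp_syslog(msg, "qradar-ec.example.com")  # hypothetical collector hostname
```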

The DSM (Device Support Module) guide gives the exact information needed to configure each log source.

2) License Filter (License Throttle)>>

Events are received by the event collector, and the first filter applied to them is the license filter. The license filter monitors the number of events entering the system.

It only allows the number of events set by the EPS (events per second) license. For example, if your license allows 5,000 EPS and 7,000 events arrive at the event collector in one second, then 5,000 events are processed and the remaining 2,000 are held in the buffer.

Note: Each event is counted against the license before events are coalesced (coalescing merges duplicate events).

What happens to the events in Buffer?

When the system goes over its license limit, burst handling seamlessly starts moving event or flow data to a temporary queue to prevent events from being dropped. A notification is also sent to the system administrator stating that the license limit has been exceeded.

As of QRadar 7.2.4, the buffer limit is 5 GB per queue (e.g., syslog, JDBC). Events leave the queue in FIFO (first in, first out) order.

The rate at which the temporary queue fills and empties depends on the license limit, the magnitude of the spike, the payload size, and other factors.
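The license filter and burst queue can be modelled as a small toy, one second at a time. This is a simplification under stated assumptions: the queue is capped by a hypothetical event count instead of the real 5 GB size, payload sizes and flows are ignored, and buffered events are released before new arrivals to respect FIFO order.

```python
from collections import deque


class LicenseThrottle:
    """Toy EPS license filter with a FIFO burst queue (not QRadar's implementation)."""

    def __init__(self, eps_limit: int, max_buffered: int):
        self.eps_limit = eps_limit
        self.max_buffered = max_buffered  # hypothetical stand-in for the 5 GB queue limit
        self.buffer = deque()
        self.dropped = 0

    def tick(self, arrivals: list) -> list:
        """Process one second of arrivals and return the events passed on to parsing."""
        released = []
        # FIFO: events already waiting in the buffer are older, so they go first.
        while self.buffer and len(released) < self.eps_limit:
            released.append(self.buffer.popleft())
        for event in arrivals:
            if len(released) < self.eps_limit:
                released.append(event)
            elif len(self.buffer) < self.max_buffered:
                self.buffer.append(event)  # over the license: hold in the burst queue
            else:
                self.dropped += 1  # queue full: the event is lost
        return released


if __name__ == "__main__":
    throttle = LicenseThrottle(eps_limit=5000, max_buffered=10_000)
    first = throttle.tick(list(range(7000)))          # spike: 7,000 events in one second
    print(len(first), len(throttle.buffer))            # 5000 processed, 2000 buffered
    second = throttle.tick(list(range(7000, 10000)))  # quieter second: 3,000 events
    print(len(second), len(throttle.buffer))           # buffer drains first, then new events
```

The numbers match the 5,000 EPS example above: the 2,000 excess events wait in the queue and are processed in the next second, when traffic drops below the licensed rate.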

3) Event Parsing (DSM Parsing)>>

This is a parser that converts raw event logs from different log sources into human-readable records.

The events are normalized here. Normalization means extracting the properties that QRadar uses, plus any custom properties that are marked as optimized.

Properties that are included in normalization are:

Event ID, source IP, destination IP, source port, destination port, protocol, pre-NAT IP/port, post-NAT IP/port, source or destination MAC, machine name, etc.

We can also add custom properties .
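A normalized record can be pictured as a fixed set of standard fields plus a bag of custom properties. The following sketch assumes a dictionary of already-extracted values and a hypothetical custom property called `TicketRef`; the field names are illustrative and do not match QRadar's internal schema.

```python
import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class NormalizedEvent:
    """A subset of the normalized properties listed above (field names are illustrative)."""
    event_id: Optional[str] = None
    source_ip: Optional[str] = None
    destination_ip: Optional[str] = None
    source_port: Optional[int] = None
    destination_port: Optional[int] = None
    protocol: Optional[str] = None
    custom_properties: dict = field(default_factory=dict)


# Hypothetical custom property: a ticket reference that only this device emits.
CUSTOM_PROPERTIES = {"TicketRef": re.compile(r"ticket=(\w+)")}


def normalize(raw: dict, payload: str) -> NormalizedEvent:
    """Map extracted values onto standard fields, then run custom property regexes."""
    event = NormalizedEvent(
        event_id=raw.get("event_id"),
        source_ip=raw.get("source_ip"),
        destination_ip=raw.get("destination_ip"),
        source_port=int(raw["source_port"]) if "source_port" in raw else None,
        destination_port=int(raw["destination_port"]) if "destination_port" in raw else None,
        protocol=raw.get("protocol"),
    )
    for name, pattern in CUSTOM_PROPERTIES.items():
        match = pattern.search(payload)
        if match:
            event.custom_properties[name] = match.group(1)
    return event
```

Ports are converted to integers during normalization so that later rule tests and searches can compare them numerically instead of as text.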

What happens if the existing parser (DSM) is not able to parse the events?

There are two scenarios that can occur when the DSM parser is not able to recognize the events from a particular log source. In both cases, the events show up under the “Unparsed” filter, and because QRadar is not able to recognize them, they might not trigger rules that should have been triggered. The two scenarios are:

- Events are reported as “Stored” under the Log Activity tab.

- Events are reported as “Unknown” under the Log Activity tab.

When the event shows up as “Stored”

This means that the parsing logic for the associated log source is not able to parse anything out of the incoming event.

In other words, the DSM parsing logic is failing for such an event.

If you created the log source manually, recheck its Log Source Type and correct it if required.

If the log source was created correctly, or if it was auto-created, open a support ticket with IBM.

The most probable cause is a new event format that has not been seen before. In that case, IBM would release a new DSM for that log source through the weekly auto updates to fix it.

When the event shows up as “Unknown”

The event viewer shows events in the “Unknown” category when the event name parsed from the event message does not match any of the known mappings between device event names and QRadar QIDs and low-level categories. This means that even though the DSM is able to parse the different parameters out of the event, the event name (parsed from the event payload) does not match any existing QID.
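The difference between “Stored” and “Unknown” comes down to two separate checks: did the DSM extract anything at all, and does the extracted event name map to a QID? This toy classifier assumes a hypothetical QID map keyed by (log source type, device event name); the QID numbers and categories are made up for illustration.

```python
# Hypothetical QID map: (log source type, device event name) -> (QID, low-level category).
QID_MAP = {
    ("CustomFirewall", "DENY"): (1000001, "Firewall Deny"),
    ("CustomFirewall", "PERMIT"): (1000002, "Firewall Permit"),
}


def classify(log_source_type: str, parsed: dict) -> str:
    """Return how an event would appear in Log Activity, following the two cases above."""
    if not parsed:
        return "Stored"  # the DSM could not parse anything out of the payload
    event_name = parsed.get("event_name")
    if (log_source_type, event_name) not in QID_MAP:
        return "Unknown"  # properties were parsed, but the event name maps to no QID
    _, category = QID_MAP[(log_source_type, event_name)]
    return category


if __name__ == "__main__":
    print(classify("CustomFirewall", {}))
    print(classify("CustomFirewall", {"event_name": "DROP", "source_ip": "10.0.0.5"}))
    print(classify("CustomFirewall", {"event_name": "DENY", "source_ip": "10.0.0.5"}))
```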

This most commonly occurs when using DSM extensions, which by design are never automatically mapped to known categories. In these cases, you need to map all the event names parsed out of your device's messages to known QRadar categories.

The second scenario where this can occur is when a supported device has newly added message types that QRadar is not aware of. While IBM works to keep these mappings up to date through the auto update process, you might occasionally still see these messages.

You have the option of mapping the events yourself (your mappings will not be overwritten by the auto update process if IBM adds them later), waiting for an update to see whether they get mapped, or logging an issue with IBM Support.

Note that if the security team adds custom event names to a supported device (event names that do not come from the third-party vendor itself), IBM will not be aware of them, and you should map them yourself. This is common with Snort, as many customers add their own signatures and messages.

To remap Unknown events, open the event viewer and click the “Map Event” button at the top of the Log Activity screen. If the system is able to parse out a unique name, you should see it in the “Device Event ID” or “Log Source Event ID” field (depending on the QRadar version).
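The rule that your own mappings survive later auto updates amounts to a lookup with two layers, where the user layer always wins. This is a minimal sketch of that precedence, not QRadar's storage model; the class, the Snort signature names, and the QID values are hypothetical.

```python
class QidMapper:
    """Toy two-layer lookup: user-defined mappings take precedence over vendor ones."""

    def __init__(self):
        self.vendor_map = {}  # replaced wholesale by (simulated) auto updates
        self.user_map = {}    # created through "Map Event"; never touched by updates

    def apply_auto_update(self, new_vendor_map: dict) -> None:
        # Only the vendor-supplied layer is replaced; user mappings are left alone.
        self.vendor_map = dict(new_vendor_map)

    def map_event(self, key: tuple, qid: int) -> None:
        self.user_map[key] = qid

    def lookup(self, key: tuple):
        if key in self.user_map:
            return self.user_map[key]
        return self.vendor_map.get(key)  # None means the event still shows as Unknown


if __name__ == "__main__":
    mapper = QidMapper()
    custom_sig = ("Snort", "CUSTOM-SIG-1")  # hypothetical in-house Snort signature
    mapper.map_event(custom_sig, 2000001)
    mapper.apply_auto_update({custom_sig: 3000001, ("Snort", "SCAN"): 3000002})
    print(mapper.lookup(custom_sig))  # the user mapping is kept
```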

4) Coalescing Filter >>

Events are parsed and then sent to the coalescing filter. You can enable or disable coalescing when creating the log source. Auto-detected log sources have coalescing turned on; you can edit such a log source to disable it.

Coalescing means that once four events are seen with the same source IP, destination IP, destination port, username, and event type, subsequent events matching the same pattern for up to 10 seconds are coalesced together and reported as one event, with the event count representing the actual number of coalesced events.

This is done to reduce the amount of duplicate data stored in the database.

Coalescing does not affect the event count against the license, because the license filter comes before coalescing in the event processing pipeline.
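The coalescing behaviour described above can be sketched as follows. This is a simplified model, assuming integer timestamps in seconds and a per-key counter that never resets; QRadar's real implementation handles windows and resets in ways not covered here.

```python
from collections import defaultdict
from dataclasses import dataclass

COALESCE_AFTER = 4   # matching events stored individually before coalescing starts
WINDOW_SECONDS = 10  # how long one coalesced record keeps absorbing matches


@dataclass
class Record:
    key: tuple
    start: float
    count: int = 1


def coalesce(events):
    """events: iterable of (timestamp, src_ip, dst_ip, dst_port, username, event_type)."""
    records = []
    seen = defaultdict(int)  # matching events seen so far per key (simplification: never reset)
    open_record = {}         # key -> coalesced Record currently absorbing matches
    for ts, *fields in events:
        key = tuple(fields)
        seen[key] += 1
        if seen[key] <= COALESCE_AFTER:
            records.append(Record(key, ts))  # the first four are stored individually
            continue
        current = open_record.get(key)
        if current is not None and ts - current.start <= WINDOW_SECONDS:
            current.count += 1  # fold into the existing coalesced record
        else:
            current = Record(key, ts)  # start a new coalesced record
            open_record[key] = current
            records.append(current)
    return records


if __name__ == "__main__":
    burst = [(t, "10.0.0.5", "192.168.1.20", 22, "root", "SSH Login Failed") for t in range(10)]
    stored = coalesce(burst)
    print([r.count for r in stored])  # 10 events counted by the license, fewer records stored
```

The license filter would count all ten events in this burst; coalescing only reduces how many records reach storage.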

5) CRE - Rules Processor (Magistrate) >>

The Custom Rule Engine (CRE) is responsible for processing events received by QRadar, comparing them against defined rules, keeping track of the systems involved in an incident over time, generating notifications to users, and generating offenses.

The QRadar CRE runs within ECS, in the “event processor”. The CRE runs on each managed host (16xx, 17xx, 18xx) and on the console (31xx, 2100) and monitors data as it comes through the pipeline.

When a single event matches a rule, the rule's response section is executed, generating new messages, emails, syslog messages, offenses, etc., as configured. Events that match rules are tagged with the rule and written to storage, so that you can later search for events that matched that rule.

Rules – what are they?

Rules, also called correlation rules, are one of the most important features that make QRadar intelligent. Rules perform tests on events, flows, or offenses, and if all the conditions of a test are met, the rule generates a response, which can be in the form of an alert. Rules can also be behavioral in nature.

By default, hundreds of rules ship with QRadar. Rules for most common attacks, such as DoS, DDoS, and exploits, are already present in QRadar.

In QRadar, rules can also generate offenses. Offenses are security incidents that need attention.
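The match-then-respond flow can be reduced to a few lines: a rule is a list of tests that must all pass, and a match tags the event and optionally raises an offense. This sketch only covers single-event rules; it assumes a hypothetical SSH rule and does not model behavioral or counting rules (for example "N events in M minutes"), which QRadar configures through its rule wizard.

```python
from dataclasses import dataclass, field
from typing import Callable, List

RuleTest = Callable[[dict], bool]


@dataclass
class Rule:
    name: str
    tests: List[RuleTest]         # every test must pass (logical AND)
    creates_offense: bool = False


@dataclass
class Event:
    properties: dict
    matched_rules: list = field(default_factory=list)


def run_cre(event: Event, rules: List[Rule], offenses: list) -> None:
    """Evaluate each rule; on a match, tag the event and run a simple response."""
    for rule in rules:
        if all(test(event.properties) for test in rule.tests):
            event.matched_rules.append(rule.name)  # tag so the event can be searched later
            if rule.creates_offense:
                offenses.append((rule.name, event.properties.get("source_ip")))


# Hypothetical single-event rule, for illustration only.
EXAMPLE_RULE = Rule(
    name="Hypothetical: SSH login failure",
    tests=[lambda p: p.get("event_name") == "SSH Login Failed",
           lambda p: p.get("destination_port") == 22],
    creates_offense=True,
)
```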

7) Ariel storage >>

A time-series database for events and flows, where data is stored on a minute-by-minute basis. The Ariel DB is QRadar's proprietary, pre-indexed, flat-file database, and its structure is what makes QRadar searches fast. Data is stored where the event is processed; remember that consoles, 16xx, and 18xx appliances can all process events.

As events come into your appliance, they are processed by ECS and stored locally on the appliance during the storage phase of ECS.

- Events such as system notifications received by a console appliance are stored in the console's Ariel database.

- Events received by an EC, EP, or EP/FP appliance are stored in that appliance's local Ariel database.
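Minute-by-minute storage is what lets a time-bounded search skip most of the data. The toy store below buckets events by minute on the host that processed them and only scans buckets inside the requested range. The bucket key layout (`YYYY/MM/DD/HH/MM`) and the class are hypothetical and are not Ariel's actual on-disk format.

```python
from collections import defaultdict
from datetime import datetime


def minute_bucket(ts: datetime) -> str:
    """Key for the one-minute slice an event falls into (layout is hypothetical)."""
    return ts.strftime("%Y/%m/%d/%H/%M")  # zero-padded, so keys sort chronologically


class LocalAriel:
    """Toy per-host store: each processing host keeps only the events it processed."""

    def __init__(self, host: str):
        self.host = host
        self.buckets = defaultdict(list)

    def store(self, ts: datetime, event: dict) -> None:
        self.buckets[minute_bucket(ts)].append(event)

    def search(self, start: datetime, end: datetime) -> list:
        """Scan only the buckets whose minute falls inside [start, end]."""
        lo, hi = minute_bucket(start), minute_bucket(end)
        return [event
                for key, events in sorted(self.buckets.items())
                if lo <= key <= hi
                for event in events]
```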

Traffic Analysis>>

Traffic analysis, also known as auto-detection, allows QRadar to automatically detect and create new log sources based on the incoming data stream.

When QRadar starts receiving data, it runs the data through the DSM parsers and then sends it to the traffic analysis engine for auto-detection.

If the incoming data comes from an unrecognized or unsupported device, QRadar will likely fail to auto-detect it, and events from that log source will show as Unknown or Stored in the UI.
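Conceptually, auto-detection tries the available DSM parsers against traffic from a new sender and creates a log source once one parser succeeds often enough. In this sketch the detection threshold of 25 events, the class, and the sample parser are all hypothetical; QRadar's real heuristics are not described in this document.

```python
from collections import Counter, defaultdict

DETECTION_THRESHOLD = 25  # hypothetical number of successfully parsed events required


class TrafficAnalysis:
    """Toy auto-detection: try each candidate DSM on events from an unknown sender."""

    def __init__(self, dsm_parsers: dict):
        self.dsm_parsers = dsm_parsers    # DSM name -> parse function returning a dict or None
        self.hits = defaultdict(Counter)  # sender -> how often each DSM parsed its events
        self.log_sources = {}             # sender -> DSM chosen for the auto-created log source

    def observe(self, sender: str, payload: str):
        if sender in self.log_sources:
            return self.log_sources[sender]
        for name, parse in self.dsm_parsers.items():
            if parse(payload):
                self.hits[sender][name] += 1
        if self.hits[sender]:
            best, count = self.hits[sender].most_common(1)[0]
            if count >= DETECTION_THRESHOLD:
                self.log_sources[sender] = best  # auto-create the log source
                return best
        return None  # not detected (yet): events show as Unknown/Stored
```

A sender whose payloads no parser understands never reaches the threshold, which matches the failure case described above.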

Create Log source manually>>

For a few DSMs, we need to create the log source manually because they are not auto-discovered. In such cases, you will get a system notification saying that auto-discovery could not discover the log source.

The DSM guide has information on which log sources are auto-discovered and which require manual log source creation.

Offsite Target >>

QRadar has the ability to forward processed, parsed events to another QRadar deployment. This is typically used in disaster recovery (DR) deployments, where customers want a second console/installation that holds a backup copy of production data and acts as a DR setup.

Event Streaming >>

Event streaming is responsible for sending real-time event data to the console when a user views events in the Log Activity tab in real-time (streaming) mode.

Real-time streamed events are not retrieved from the database; they are shown in real time after they pass through the CRE.

Events are retrieved from the Ariel DB only when you run historical searches.
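Historical searches can also be started programmatically through QRadar's REST API, which accepts an AQL query and runs it against the Ariel DB. The sketch below only builds the request; the console hostname, token, and log source name are placeholders, and you should check the API documentation for your QRadar version before relying on the exact endpoint behaviour.

```python
import urllib.parse
import urllib.request


def build_ariel_search_request(console: str, token: str, aql: str) -> urllib.request.Request:
    """Build the POST request that starts an asynchronous historical (Ariel) search."""
    query = urllib.parse.urlencode({"query_expression": aql})
    url = f"https://{console}/api/ariel/searches?{query}"
    return urllib.request.Request(
        url,
        method="POST",
        headers={"SEC": token, "Accept": "application/json"},  # SEC carries the API token
    )


# Example AQL: last hour of events from a hypothetical log source named "fw01".
AQL = ("SELECT sourceip, destinationip, QIDNAME(qid) AS event_name "
       "FROM events WHERE LOGSOURCENAME(logsourceid) = 'fw01' LAST 1 HOURS")

if __name__ == "__main__":
    req = build_ariel_search_request("qradar.example.com", "<authorized-service-token>", AQL)
    print(req.get_method(), req.full_url)
    # Sending it with urllib.request.urlopen(req) returns JSON that includes a search_id;
    # poll /api/ariel/searches/<search_id> until it completes, then read .../results.
```

Because the search runs asynchronously on the Ariel side, the client starts it, polls for completion, and fetches results separately, unlike the streaming view, which shows events as they leave the CRE.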